Graphical user interface (GUI)

The graphical user interface (GUI, /ɡuːiː/) is a type of user interface that allows users to interact with electronic devices through graphical icons and visual indicators such as secondary notation, instead of text-based user interfaces, typed command labels or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs),[1][2][3] which require commands to be typed on a computer keyboard.

The actions in a GUI are usually performed through direct manipulation of the graphical elements.[4] Beyond computers, GUIs are used in many handheld mobile devices such as MP3 players, portable media players, gaming devices, smartphones and smaller household, office and industrial controls. The term GUI tends not to be applied to other, lower-display-resolution types of interfaces, such as video games (where head-up display (HUD)[5] is preferred), or to interfaces that do not use flat screens, like volumetric displays,[6] because the term is restricted to the scope of two-dimensional display screens able to describe generic information, in the tradition of the computer science research at the Xerox Palo Alto Research Center.

Designing the visual composition and temporal behavior of a GUI is an important part of software application programming in the area of human–computer interaction. Its goal is to enhance the efficiency and ease of use for the underlying logical design of a stored program, a design discipline named usability. Methods of user-centered design are used to ensure that the visual language introduced in the design is well-tailored to the tasks.

User interface and interaction design:

The visible graphical interface features of an application are sometimes referred to as chrome or GUI (pronounced gooey).[7][8] Typically, users interact with information by manipulating visual widgets that allow for interactions appropriate to the kind of data they hold. The widgets of a well-designed interface are selected to support the actions necessary to achieve the goals of users. A model–view–controller allows a flexible structure in which the interface is independent from and indirectly linked to application functions, so the GUI can be customized easily. This allows users to select or design a different skin at will, and eases the designer's work to change the interface as user needs evolve. Good user interface design relates to users more, and to system architecture less.
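The separation described above can be illustrated with a small sketch. It is only one possible reading of model–view–controller, and every name in it (CounterModel, PlainView, FancyView, CounterController) is hypothetical, invented for this example. The point it tries to show is that two different "skins" can present the same application state without the model knowing about either of them.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CounterModel:
    """Application state; it knows nothing about how it is displayed."""
    value: int = 0
    listeners: List[Callable[[int], None]] = field(default_factory=list)

    def subscribe(self, listener: Callable[[int], None]) -> None:
        self.listeners.append(listener)

    def increment(self) -> None:
        self.value += 1
        # Notify every subscriber that the state changed.
        for listener in self.listeners:
            listener(self.value)


class PlainView:
    """One way of presenting the counter."""
    def render(self, value: int) -> str:
        return f"Count: {value}"


class FancyView:
    """An alternative skin for the same model."""
    def render(self, value: int) -> str:
        return f"*** {value} ***"


class CounterController:
    """Turns user input into model updates and redraws its view on change."""
    def __init__(self, model: CounterModel, view) -> None:
        self.model = model
        self.view = view
        self.screen = view.render(model.value)
        model.subscribe(self._on_change)

    def _on_change(self, value: int) -> None:
        self.screen = self.view.render(value)

    def click(self) -> None:
        self.model.increment()


# Two controllers with different skins share one model.
model = CounterModel()
plain = CounterController(model, PlainView())
fancy = CounterController(model, FancyView())

plain.click()          # a single user action updates the shared model
print(plain.screen)    # Count: 1
print(fancy.screen)    # *** 1 ***
```

Swapping PlainView for FancyView changes only what the user sees. The model and the click handling stay untouched, which is the property that makes skins and later interface changes cheap.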

Large widgets, such as windows, usually provide a frame or container for the main presentation content such as a web page, email message or drawing. Smaller ones usually act as a user-input tool.

A GUI may be designed for the requirements of a vertical market as application-specific graphical user interfaces. Examples include automated teller machines (ATM), point of sale (POS) touchscreens at restaurants,[9] self-service checkouts used in a retail store, airline self-ticketing and check-in, information kiosks in a public space, like a train station or a museum, and monitors or control screens in an embedded industrial application which employ a real-time operating system (RTOS).

Components:

A GUI uses a combination of technologies and devices to provide a platform that users can interact with, for the tasks of gathering and producing information.

A series of elements conforming a visual language have evolved to represent information stored in computers. This makes it easier for people with few computer skills to work with and use computer software. The most common combination of such elements in GUIs is the windows, icons, menus, pointer (WIMP) paradigm, especially in personal computers.

The WIMP style of interaction uses a virtual input device to represent the position of a pointing device, most often a mouse, and presents information organized in windows and represented with icons. Available commands are compiled together in menus, and actions are performed making gestures with the pointing device. A window manager facilitates the interactions between windows, applications, and the windowing system. The windowing system handles hardware devices such as pointing devices, graphics hardware, and positioning of the pointer.
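As a rough illustration of how a pointer position is matched to a window, the sketch below keeps windows in a stacking order and sends a click to the topmost window under the pointer, raising it to the front. It is a toy model under simplifying assumptions: the Window and WindowManager classes are hypothetical, and real systems divide this work between the windowing system and the window manager in more elaborate ways.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Window:
    title: str
    x: int
    y: int
    width: int
    height: int

    def contains(self, px: int, py: int) -> bool:
        """True if the pointer position lies inside this window's rectangle."""
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


class WindowManager:
    def __init__(self) -> None:
        # Stacking order: the last element is the topmost window.
        self.stack: List[Window] = []

    def open(self, window: Window) -> None:
        self.stack.append(window)

    def window_at(self, px: int, py: int) -> Optional[Window]:
        """Hit test: search from the topmost window downwards."""
        for window in reversed(self.stack):
            if window.contains(px, py):
                return window
        return None

    def click(self, px: int, py: int) -> Optional[str]:
        """Deliver a click and raise the window that received it."""
        window = self.window_at(px, py)
        if window is None:
            return None  # the click landed on the empty desktop
        self.stack.remove(window)
        self.stack.append(window)
        return window.title


wm = WindowManager()
wm.open(Window("Editor", 0, 0, 100, 100))
wm.open(Window("Terminal", 50, 50, 100, 100))

print(wm.click(60, 60))    # Terminal: it overlaps the Editor and is on top
print(wm.click(10, 10))    # Editor: only the Editor covers this point
print(wm.click(60, 60))    # Editor: it was raised by the previous click
print(wm.click(500, 500))  # None: no window here
```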

In personal computers, all these elements are modeled through a desktop metaphor to produce a simulation called a desktop environment in which the display represents a desktop, on which documents and folders of documents can be placed. Window managers and other software combine to simulate the desktop environment with varying degrees of realism.

History of the GUI:

Ivan Sutherland developed Sketchpad in 1963, widely held as the first graphical computer-aided design program. It used a light pen to create and manipulate objects in engineering drawings in realtime with coordinated graphics. In the late 1960s, researchers at the Stanford Research Institute, led by Douglas Engelbart, developed the On-Line System (NLS), which used text-based hyperlinks manipulated with a then new device: the mouse. In the 1970s, Engelbart's ideas were further refined and extended to graphics by researchers at Xerox PARC and specifically Alan Kay, who went beyond text-based hyperlinks and used a GUI as the main interface for the Xerox Alto computer, released in 1973. Most modern general-purpose GUIs are derived from this system.

The Xerox Star 8010 workstation introduced the first commercial GUI.

The Xerox PARC user interface consisted of graphical elements such as windows, menus, radio buttons, and check boxes. The concept of icons was later introduced by David Canfield Smith, who had written a thesis on the subject under the guidance of Kay.[12][13][14] The PARC user interface employs a pointing device along with a keyboard. These aspects can be emphasized by using the alternative term and acronym for windows, icons, menus, pointing device (WIMP). This effort culminated in the 1973 Xerox Alto, the first computer with a GUI, though the system never reached commercial production.

The first commercially available computer with a GUI was the 1979 PERQ workstation, manufactured by Three Rivers Computer Corporation. In 1981, Xerox eventually commercialized the Alto in the form of a new and enhanced system – the Xerox 8010 Information System – more commonly known as the Xerox Star.[15][16] These early systems spurred many other GUI efforts, including the Apple Lisa (which presented the concept of menu bar and window controls) in 1983, the Apple Macintosh 128K in 1984, and the Atari ST with Digital Research's GEM, and Commodore Amiga in 1985. Visi On was released in 1983 for the IBM PC compatible computers, but was never popular due to its high hardware demands.[17] Nevertheless, it was a crucial influence on the contemporary development of Microsoft Windows.[18]

Apple, Digital Research, IBM and Microsoft used many of Xerox's ideas to develop products, and IBM's Common User Access specifications formed the basis of the user interfaces used in Microsoft Windows, IBM OS/2 Presentation Manager, and the Unix Motif toolkit and window manager. These ideas evolved to create the interface found in current versions of Microsoft Windows, and in various desktop environments for Unix-like operating systems, such as macOS and Linux. Thus most current GUIs have largely common idioms.

Operating systems that use a graphical user interface (GUI):

- X Window System

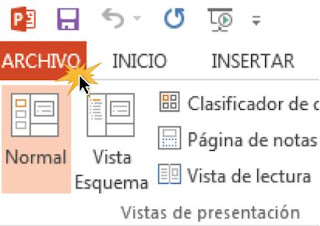

- Windows

- Mac OS X

- Aqua

- Apple Macintosh

Glossary:

AJAX:

Information architecture: (IA) is the discipline and art concerned with the study, analysis, organization, arrangement and structuring of information in information spaces, and with the selection and presentation of data in interactive and non-interactive information systems.

Dynamic-link library: more commonly DLL, is the term for files containing executable code that the operating system loads on demand for a program.

Software engineering: the application of a systematic, disciplined and quantifiable approach to the development, operation and maintenance of software.

Interface: a device capable of transforming the signals generated by one apparatus into signals that another can understand.

User interface: the means by which a person controls a software application or a hardware device. A good user interface provides an "easy to use" experience that lets a person interact with the software or hardware in a natural and intuitive way.

Human–computer interaction: there is not yet a settled definition for the set of concepts that make up the field of human–computer interaction (HCI). In general terms, it is the discipline that studies the exchange of information, by means of software, between people and computers.

Command-line interface (CLI): a method that lets users give instructions to a computer program by means of a simple line of text.

Fitts's law: states that the time needed to reach a (visual) target is a function of the distance to that target and of its size. In other words, the time required to reach and click a target depends on a logarithmic relationship between its size and the distance to it.
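A short sketch can make the logarithmic relationship concrete. It uses the widely cited Shannon formulation, MT = a + b · log2(D / W + 1), where D is the distance to the target and W is its width along the direction of movement. The constants a and b are found empirically for a given device and user; the default values below are arbitrary placeholders chosen for illustration, not measurements, and the function name is hypothetical.

```python
import math


def fitts_movement_time(distance: float, width: float,
                        a: float = 0.1, b: float = 0.15) -> float:
    """Estimate the time (in seconds) to reach a target.

    distance: distance from the pointer to the target centre
    width:    target size along the axis of movement (same unit as distance)
    a, b:     empirical constants; the defaults are placeholders, not data
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    # Index of difficulty in bits: grows with distance, shrinks with size.
    index_of_difficulty = math.log2(distance / width + 1)
    return a + b * index_of_difficulty


near_small = fitts_movement_time(distance=300, width=100)
far_small = fitts_movement_time(distance=700, width=100)
near_large = fitts_movement_time(distance=300, width=200)

print(far_small > near_small)   # True: a farther target takes longer
print(near_large < near_small)  # True: a larger target is quicker to hit
```

This is why frequently used controls tend to be made large or placed close to where the pointer usually rests.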

Look and feel: (the way something looks and feels) is a metaphor used in marketing to give products a unique image, covering areas such as exterior design, trade dress, the box in which the product is delivered to the customer, and so on.
